Artificial intelligence, often called AI, is part of our daily lives in ways we might not even realize. It can suggest movies to watch, screen job applications, or help doctors spot illnesses. These tools are powerful, but they are not perfect. In fact, sometimes they make unfair or biased decisions.

You may have seen news stories about facial recognition systems misidentifying people with darker skin, or hiring tools that favor men over women. These are examples of AI bias, and they can have serious effects on people’s lives. The good news is that you do not need to be a computer expert to understand why this happens.

In this article, we’ll explore what bias in AI means, how it happens, and why it matters to all of us.

Key Takeaways

- AI learns from data created by humans, which can include our flaws and prejudices.

- If the data is biased or incomplete, the AI can repeat unfair patterns.

- Examples include biased hiring tools, unfair credit scoring, and facial recognition errors.

- Understanding AI bias helps us spot problems and ask for fairer technology.

How AI Learns: The Basics

Think of AI as a student. Instead of learning from teachers and books, it studies huge amounts of data. This data might include résumés from past job applicants, thousands of photos of people’s faces, or financial histories.

By looking for patterns, the AI tries to “learn” what a good job candidate looks like, or how to tell one person apart from another.

For example:

- A hiring AI might look at thousands of past job applications and notice which applicants were chosen.

- A medical AI might review millions of X-rays to learn how to spot signs of disease.

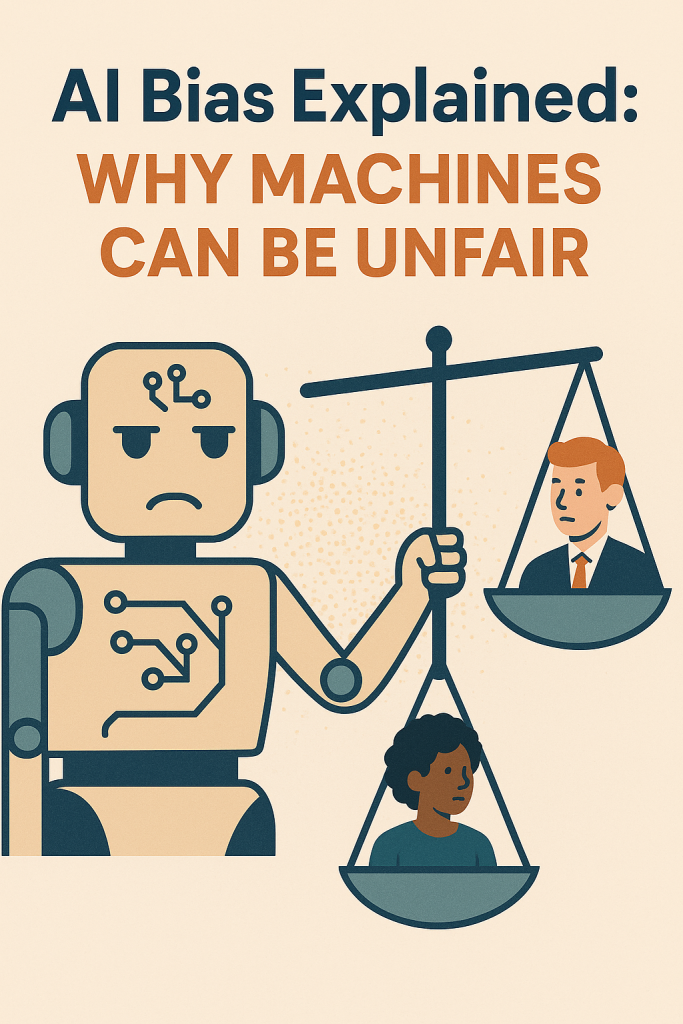

Here’s the important part: AI does not think for itself. It only knows what it has been shown. If the data it studies is unfair or incomplete, the AI’s decisions are likely to be unfair too.

Where Does Bias Come From?

AI bias does not come out of thin air. It usually comes from three main sources:

- Biased Data

If the information used to train an AI reflects unfair patterns, the AI will copy them. For example, if a company mostly hired men for technical roles in the past, an AI trained on those records might learn to favor men’s résumés, even if women are equally qualified.

- Missing Data

Sometimes certain groups are not represented well in the data. For example, many facial recognition systems were trained mostly on lighter-skinned faces. As a result, they made more mistakes when identifying people with darker skin tones.

- Design Choices

The people who build AI decide which data to include, which features to focus on, and how the system should behave. Even without meaning to, those choices can create bias.
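For readers curious about what “copying unfair patterns” looks like in practice, here is a deliberately oversimplified sketch in Python. It is not how any real hiring system works: the past records are made up for illustration, and the “model” does nothing more than count how often each kind of applicant was hired before. Even so, it shows the core problem: two equally qualified applicants get different scores only because the history it learned from treated them differently.

```python
from collections import defaultdict

# Made-up historical records: (gender, qualified, hired).
# In this invented history, qualified women were hired far less often than qualified men.
past_applications = [
    ("man", True, True), ("man", True, True), ("man", True, True), ("man", True, False),
    ("woman", True, False), ("woman", True, False), ("woman", True, True), ("woman", True, False),
    ("man", False, False), ("woman", False, False),
]

def train(records):
    """'Learn' the share of past applicants hired for each (gender, qualified) pair."""
    counts = defaultdict(lambda: [0, 0])  # key -> [number hired, number of applicants]
    for gender, qualified, hired in records:
        counts[(gender, qualified)][0] += int(hired)
        counts[(gender, qualified)][1] += 1
    return {key: hired / total for key, (hired, total) in counts.items()}

def score(model, gender, qualified):
    """Score a new applicant by repeating the historical hire rate for their group."""
    return model.get((gender, qualified), 0.0)

model = train(past_applications)
print(score(model, "man", True))    # 0.75
print(score(model, "woman", True))  # 0.25
```

Nothing in the code says “prefer men”; the unequal scores come entirely from the data. Real systems are far more complex and often never see gender directly, but they can still pick up the same pattern through related clues in the data.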

Everyday Examples of AI Bias

- Facial Recognition Problems

Research has shown that facial recognition software can be very accurate for some groups of people but far less accurate for others. Some systems, for example, performed best on white men’s faces but made many more errors on women and people of color. This can be dangerous if the technology is used in policing, since a wrong match could lead to someone being accused unfairly.

- Unfair Hiring Tools

Some companies use AI to sort through résumés or even to judge video interviews. In one case, an AI tool learned from a company’s past hiring decisions, which had favored men. As a result, it gave higher scores to male candidates and lower scores to women, even when their qualifications were the same.

- Credit and Lending Decisions

Financial institutions sometimes use AI to help decide who gets approved for loans or credit cards. But if the AI relies on biased historical data, such as credit records tied to certain neighborhoods, it may deny financially responsible people simply because they live in the “wrong” area.

Why AI Bias Matters

At first, these examples might sound like small glitches. In reality, they can have very real consequences.

- Imagine being turned down for a job because a machine favored applicants who looked like the company’s past hires.

- Imagine being denied a loan not because of your income or credit score, but because of a biased pattern hidden in the system.

- Imagine being wrongly identified by facial recognition, leading to a false police report.

These issues are about more than technology. They affect people’s rights, opportunities, and everyday lives. That’s why it is so important to recognize and fix AI bias.

Can AI Be Made Fairer?

The good news is that people around the world are working to make AI fairer and more transparent. Some of the steps include:

- Better Data Collection

Making sure the information used to train AI represents many groups of people fairly, instead of only a small portion.

- Regular Testing

Running checks to see if an AI treats different groups equally. For example, does it give the same accuracy for women as it does for men?

- Transparency and Accountability

Asking companies to explain how their AI systems work, what data they use, and how decisions are made.

- Human Oversight

Making sure important decisions, such as hiring or lending, are not made by AI alone. A human should always have the final say.
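The “Regular Testing” step can be as simple as measuring accuracy separately for each group instead of only overall. The Python sketch below assumes you already have a list of test results recording each person’s group, what the system predicted, and what was actually true. The results and the 5% tolerance are invented for illustration; real audits use larger test sets and several different fairness measures.

```python
from collections import defaultdict

def accuracy_by_group(results):
    """Return the share of correct predictions for each group.

    `results` is a list of (group, predicted, actual) tuples.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in results:
        total[group] += 1
        correct[group] += int(predicted == actual)
    return {group: correct[group] / total[group] for group in total}

# Hypothetical results from a face-matching test: (group, predicted match, true match).
test_results = [
    ("men", True, True), ("men", False, False), ("men", True, True), ("men", False, False),
    ("women", True, False), ("women", False, False), ("women", True, True), ("women", False, True),
]

report = accuracy_by_group(test_results)
print(report)  # {'men': 1.0, 'women': 0.5}

# Flag the system if accuracy differs between groups by more than a chosen tolerance.
gap = max(report.values()) - min(report.values())
if gap > 0.05:  # illustrative threshold, not an official standard
    print(f"Warning: accuracy gap between groups is {gap:.0%}")
```

Overall, this made-up system is right 75% of the time, which might sound acceptable. Only the per-group breakdown reveals that every mistake falls on one group.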

While no system can ever be completely free of bias, these steps can greatly reduce the risk. The more aware we are, the more we can push for fair practices.

What You Can Do as a User

Even if you are not building AI systems yourself, there are ways to stay informed and protect yourself:

- Be Curious: If you hear about a company using AI in hiring or lending, ask how it works and whether it has been tested for fairness.

- Read the News: Keep an eye out for stories about AI bias. These can help you understand where problems are showing up.

- Support Transparency: When companies are open about their AI, it builds trust. Look for organizations that value fairness and accountability.

Final Thoughts

AI is powerful, but it is not perfect. It learns from us, and sometimes it learns our flaws too. When we see unfair results in technology, it is often because the system was copying patterns from the past.

By understanding AI bias, you can make sense of why machines sometimes seem unfair and why it matters to challenge those systems. The more we talk about these issues, the more likely it is that AI will be built in ways that are fair and useful for everyone.

Technology should help people, not hold them back—and with awareness, we can all play a part in making that happen.